About Us

EchoMimic

#1

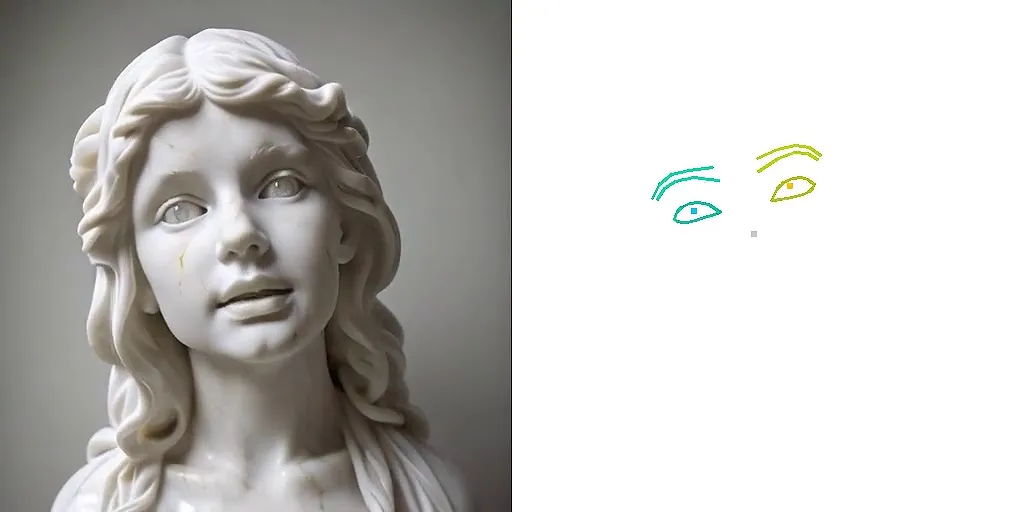

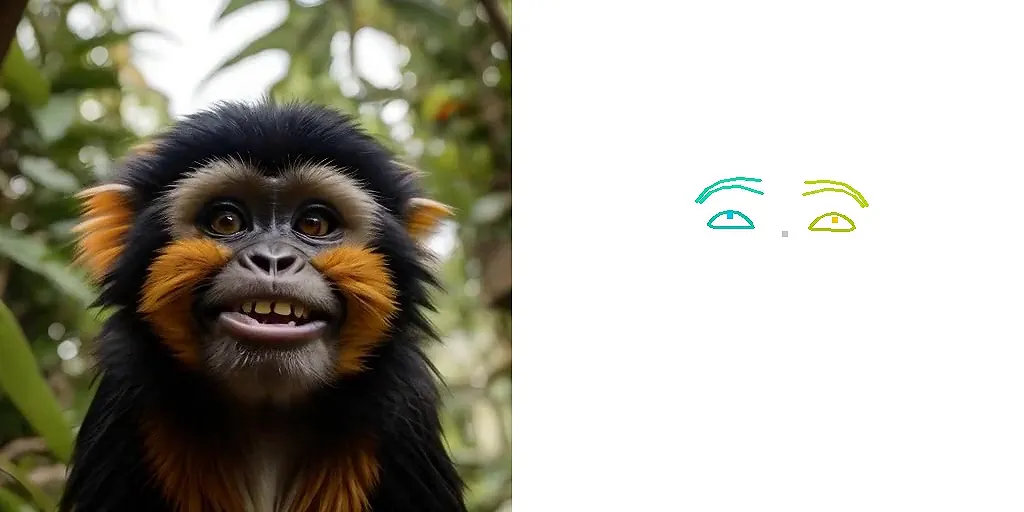

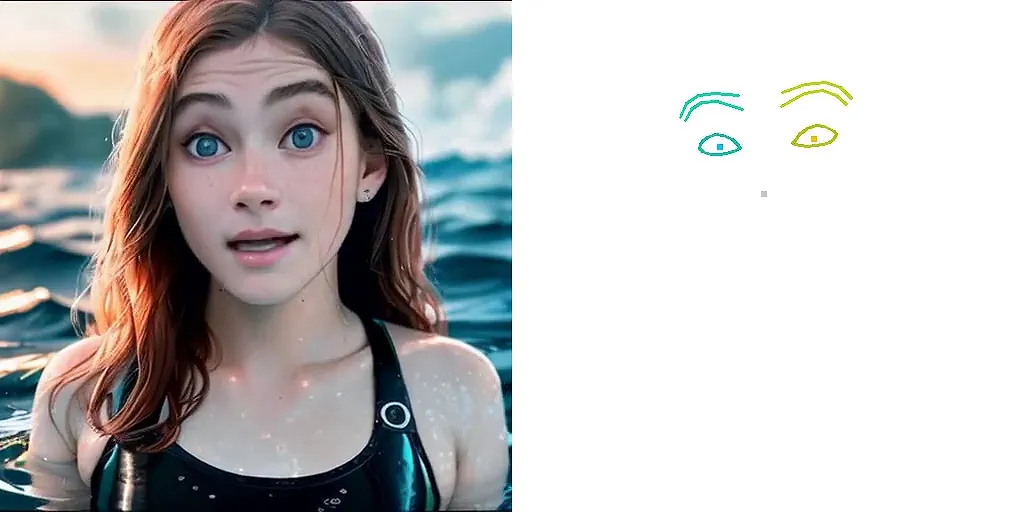

Illustrate / 说明

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua.

#2

Abstract / 摘要

Vitae auctor eu augue ut lectus. Malesuada pellentesque elit eget gravida, sit amet dictum sit amet justo donec enim.

#3

Gallery / 展示

Convallis posuere morbi leo urna molestie at elementum. Nunc pulvinar sapien et ligula ullamcorper. Lorem donec massa sapien faucibus.

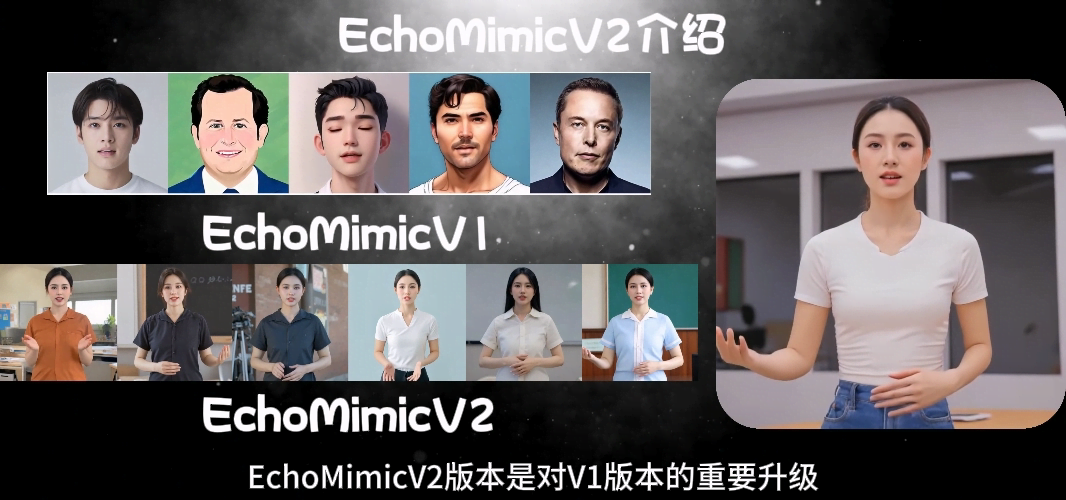

EchoMimicV2: Towards Striking, Simplified, and Semi-Body Human Animation

EchoMimicV1: Lifelike Audio-Driven Portrait Animations through Editable Landmark Conditioning.


EchoMimicV2: Towards Striking, Simplified, and Semi-Body Human Animation.


[2025.02.27] 🔥 EchoMimicV2 has been accepted to CVPR 2025.

[2025.01.16] 🔥 Please check out the discussions to learn how to get started with EchoMimicV2.

[2025.01.16] 🚀🔥 GradioUI for Accelerated EchoMimicV2 is now available.

[2025.01.03] 🚀🔥 One Minute is All You Need to Generate Video. Accelerated EchoMimicV2 is released. Inference is about 9x faster (from ~7 min to ~50 s per 120 frames on an A100 GPU).
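As a quick sanity check on the announced figures, the snippet below turns the rounded timings (~7 min and ~50 s for a 120-frame clip on an A100) into a speed-up ratio and a per-frame cost. Because the inputs are the rounded values from the announcement, the results are approximate.

```python
# Rounded timings from the announcement, both for a 120-frame clip on an A100.
FRAMES = 120
baseline_s = 7 * 60   # ~7 minutes before acceleration
accelerated_s = 50    # ~50 seconds after acceleration

speedup = baseline_s / accelerated_s             # 420 / 50
baseline_per_frame = baseline_s / FRAMES         # seconds per frame, before
accelerated_per_frame = accelerated_s / FRAMES   # seconds per frame, after

print(f"speed-up: {speedup:.1f}x")  # speed-up: 8.4x
print(f"per frame: {baseline_per_frame:.2f} s -> {accelerated_per_frame:.2f} s")  # per frame: 3.50 s -> 0.42 s
```

With these rounded inputs the ratio comes out at about 8.4x, in the same range as the announced ~9x; the exact factor depends on the unrounded measurements.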

[2024.12.16] 🔥 A RefImg-Pose Alignment demo is now available. It aligns the reference image, extracts the pose from the driving video, and generates the video.
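The alignment step is not described in detail here. As a minimal sketch, assume it means fitting a uniform scale and a translation that map pose keypoints extracted from the driving video onto the matching keypoints of the reference image in the least-squares sense, so the driving poses match the reference person's size and position. This interpretation, the omission of rotation, and the helper names `fit_scale_translation` and `apply_scale_translation` are assumptions for illustration, not the demo's actual code.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def fit_scale_translation(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Least-squares fit of dst ~= s * src + t (uniform scale s, translation t, no rotation).

    Hypothetical helper: src are driving-pose keypoints, dst are the matching
    keypoints detected on the reference image.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matching keypoints")
    n = len(src)
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    # With both sets centred, the optimal scale is <src_c, dst_c> / <src_c, src_c>.
    num = sum((a[0] - sx) * (b[0] - dx) + (a[1] - sy) * (b[1] - dy) for a, b in zip(src, dst))
    den = sum((a[0] - sx) ** 2 + (a[1] - sy) ** 2 for a in src)
    if den == 0:
        raise ValueError("source keypoints are all identical")
    s = num / den
    # The translation moves the scaled source centroid onto the destination centroid.
    t = (dx - s * sx, dy - s * sy)
    return s, t


def apply_scale_translation(s: float, t: Point, pts: List[Point]) -> List[Point]:
    """Map keypoints of a driving frame into reference-image coordinates (hypothetical helper)."""
    return [(s * x + t[0], s * y + t[1]) for x, y in pts]
```

For instance, fitting the source points (0, 0), (2, 0), (0, 2) to (10, 10), (14, 10), (10, 14) gives a scale of 2 and a translation of (10, 10), up to floating-point rounding. Leaving rotation out keeps the fit to three parameters; a full similarity transform would also estimate an angle.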

[2024.11.27] 🔥 An installation tutorial is now available. Thanks to AiMotionStudio for the contribution.

[2024.11.22] 🔥 GradioUI is now available. Thanks to @gluttony-10 for the contribution.

[2024.11.22] 🔥 ComfyUI is now available. Thanks to @smthemex for the contribution.

[2024.11.21] 🔥 We release the EMTD dataset list and processing scripts.

[2024.11.21] 🔥 We release our EchoMimicV2 code and models.

[2024.11.15] 🔥 Our paper is now publicly available on arXiv.


#1

Illustration

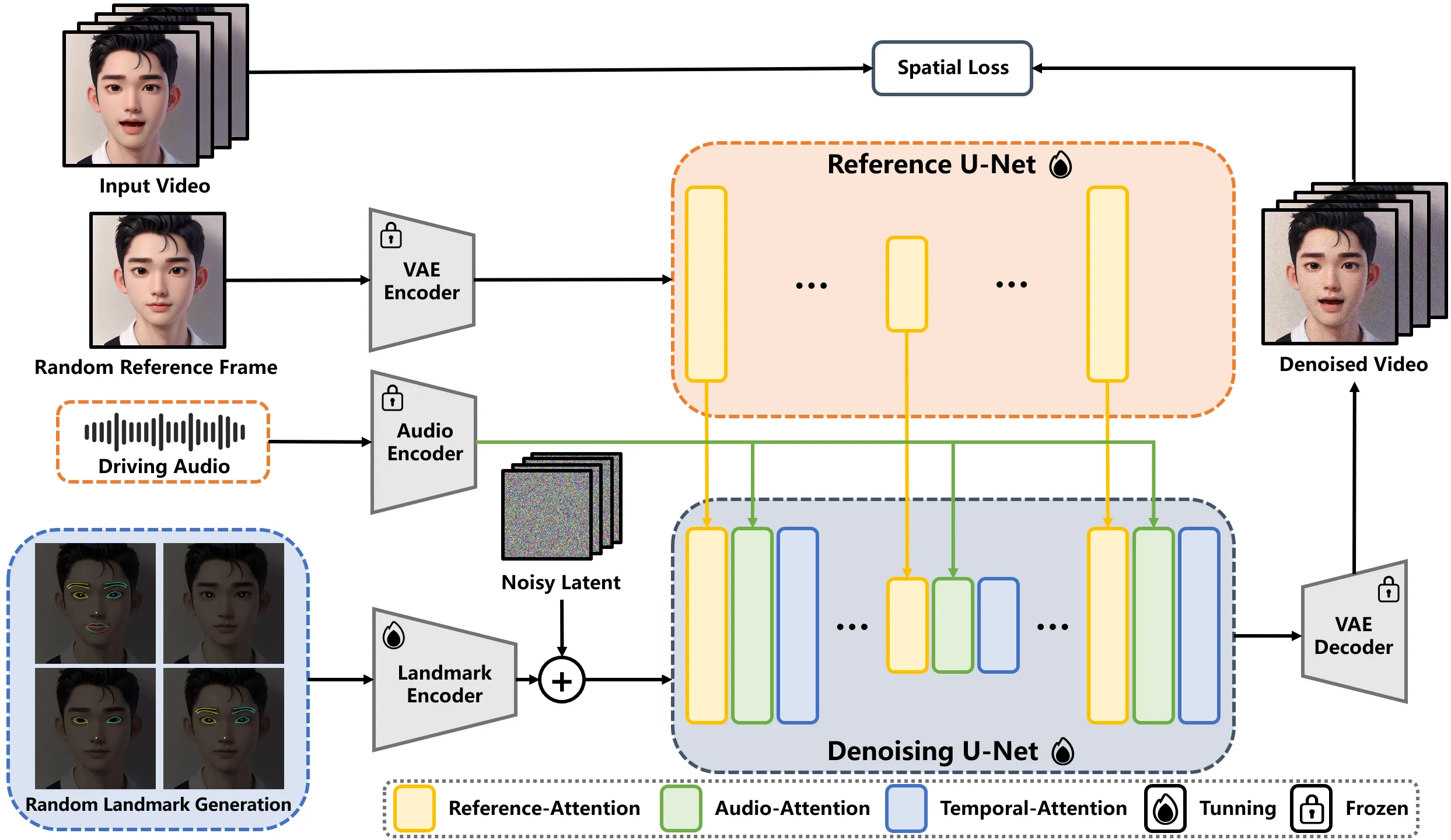

Lifelike Audio-Driven Portrait Animations through Editable Landmark Conditioning

Zhiyuan Chen*, Jiajiong Cao*, Zhiquan Chen, Yuming Li, Chenguang Ma

*Equal contribution.

Terminal Technology Department, Alipay, Ant Group.

#2

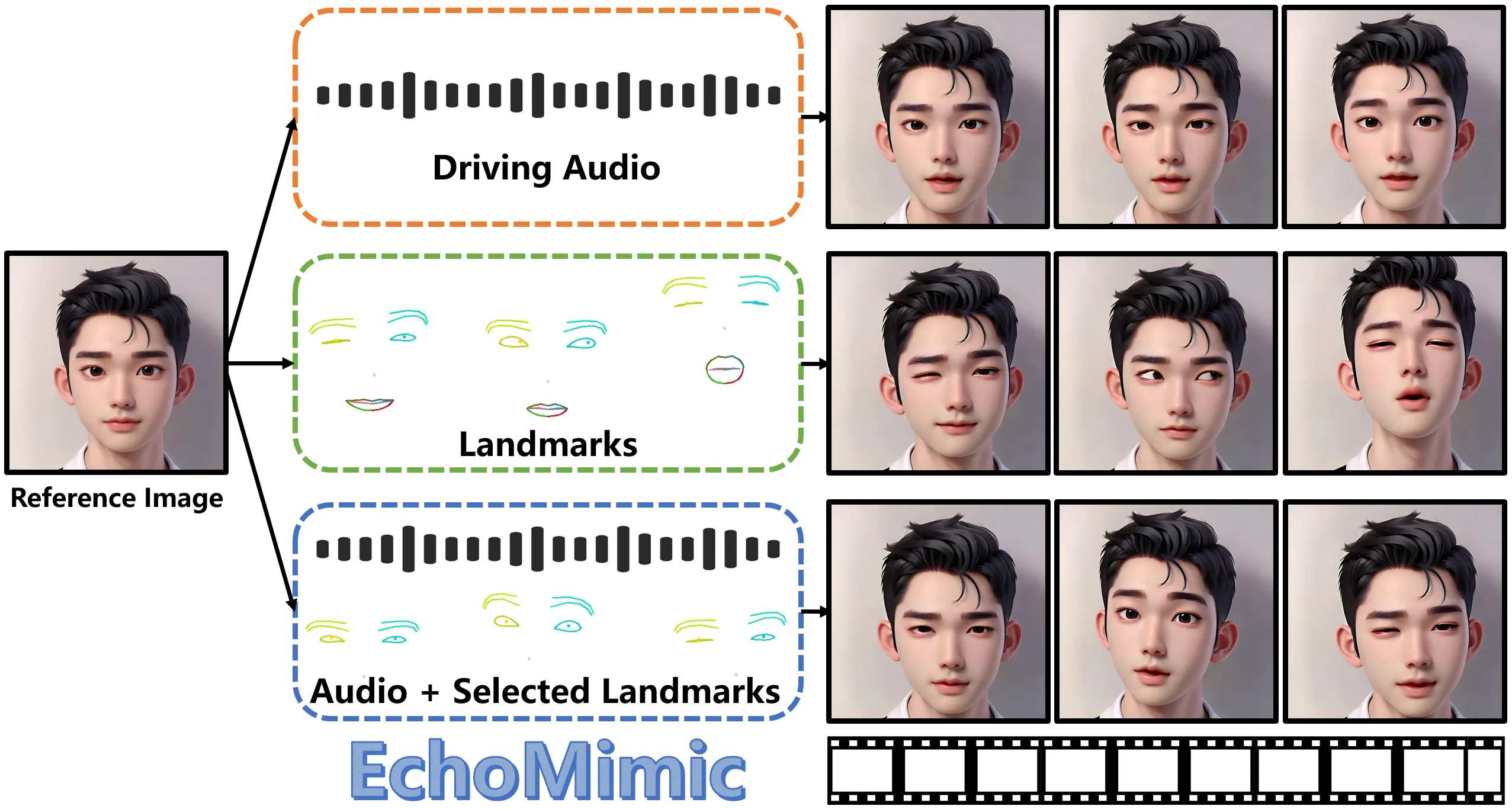

Abstract


EchoMimic can generate portrait videos driven by audio alone, by facial landmarks alone, or by a combination of audio and selected facial landmarks.
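Driving with "selected" facial landmarks can be pictured as masking the landmark set down to chosen facial regions before it is passed to the model as a condition, leaving the remaining facial motion to the audio. The sketch below is illustrative only: the region names, the index ranges (loosely modelled on the common 68-point face layout), and the function `select_landmarks` are assumptions, not EchoMimic's interface.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical region map based on the widely used 68-point face layout.
REGIONS: Dict[str, range] = {
    "jaw": range(0, 17),
    "eyebrows": range(17, 27),
    "nose": range(27, 36),
    "eyes": range(36, 48),
    "mouth": range(48, 68),
}


def select_landmarks(
    landmarks: Sequence[Point], keep: Sequence[str]
) -> Tuple[List[Optional[Point]], List[bool]]:
    """Keep only the landmarks in the named regions and replace the rest with None.

    Also returns a boolean mask so a downstream model can tell
    "not provided" apart from a real coordinate.
    """
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks")
    unknown = set(keep) - set(REGIONS)
    if unknown:
        raise ValueError(f"unknown regions: {sorted(unknown)}")
    mask = [False] * len(landmarks)
    for name in keep:
        for i in REGIONS[name]:
            mask[i] = True
    masked = [p if m else None for p, m in zip(landmarks, mask)]
    return masked, mask
```

For example, keeping only the eye region (12 points) would let the landmarks constrain gaze and blinks while the mouth region is left for the audio to drive.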


The field of audio-driven portrait image animation has made notable progress in generating lifelike and dynamic portraits. Conventional methods drive images into videos using either audio or facial keypoints alone; while they can yield satisfactory results, they have drawbacks. For instance, methods driven solely by audio can be unstable at times because the audio signal is relatively weak, whereas methods driven exclusively by facial keypoints, although more stable, can produce unnatural results because the keypoint information exerts excessive control. To address these challenges, this paper introduces a new approach named EchoMimic. EchoMimic is trained on audio and facial landmarks concurrently. Through a novel training strategy, EchoMimic can generate portrait videos driven by audio alone, by facial landmarks alone, or by a combination of audio and selected facial landmarks. EchoMimic has been comprehensively compared with alternative algorithms on various public datasets and on our collected dataset, showing superior performance in both quantitative and qualitative evaluations. Additional visualizations and the source code are available on the EchoMimic project page.
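A common way to train one model that accepts audio only, landmarks only, or both is to randomly drop each condition during training. The sketch below illustrates that general idea with hypothetical default probabilities and a hypothetical `sample_conditions` helper; it is not the specific training strategy described in the EchoMimic paper.

```python
import random
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Conditions:
    audio: Optional[Sequence[float]]      # None means audio is dropped for this step
    landmarks: Optional[Sequence[float]]  # None means landmarks are dropped for this step


def sample_conditions(
    audio: Sequence[float],
    landmarks: Sequence[float],
    rng: random.Random,
    p_audio_only: float = 0.25,      # assumed value, for illustration
    p_landmarks_only: float = 0.25,  # assumed value, for illustration
) -> Conditions:
    """Choose which conditions one training step sees.

    With probability p_audio_only the landmarks are dropped, with probability
    p_landmarks_only the audio is dropped, and otherwise both are kept, so a
    step never sees neither condition.
    """
    if p_audio_only < 0 or p_landmarks_only < 0 or p_audio_only + p_landmarks_only > 1:
        raise ValueError("invalid probabilities")
    u = rng.random()  # uniform in [0, 1)
    if u < p_audio_only:
        return Conditions(audio=audio, landmarks=None)
    if u < p_audio_only + p_landmarks_only:
        return Conditions(audio=None, landmarks=landmarks)
    return Conditions(audio=audio, landmarks=landmarks)
```

With the default values, about a quarter of the steps are audio-only, a quarter landmark-only, and half use both, so the same weights learn each driving mode.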

#3

Gallery

Audio Driven (Chinese)

Audio Driven (English)

Audio Driven (Singing)

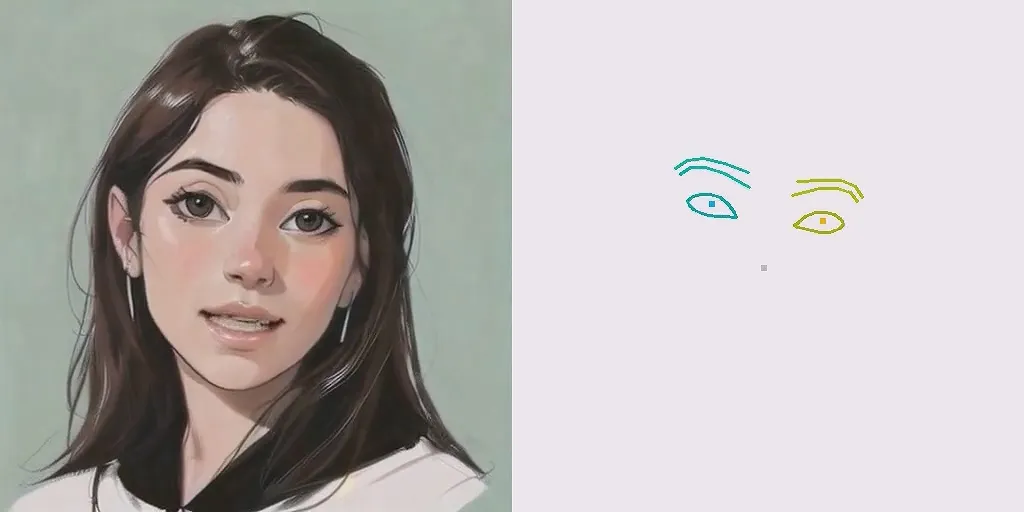

Landmark Driven